More Factoidal Facts

by Brian Hayes

Published 3 June 2007

Anthony G. Pakes of the University of Western Australia shines further light on the “factoidal” function, discussed earlier here on bit-player and in the May-June issue of American Scientist:

I found your article about factoid(n) very interesting, and I offer you some analytically derived facts about it.

I will denote factoid(n) by f(n). You probably realize that 2f(2) is the payout in the St Petersberg game, i.e. the payout if tossing a fair coin repeatedly shows the first head on toss number n. Its probability law is exactly known, so there is not much more to say about it.

For n=2, 3, …, there is a critical number tn such that 1 = t2 < t3 < t4 < …, and the moment of order t of f(n) is finite iff t < tn. I have an explicit formula for this moment. I believe that, for large n, tn is asymptotically proportional to 1/n log(n) (natural logs), but my ‘proof’ of this is not entirely rigorous.

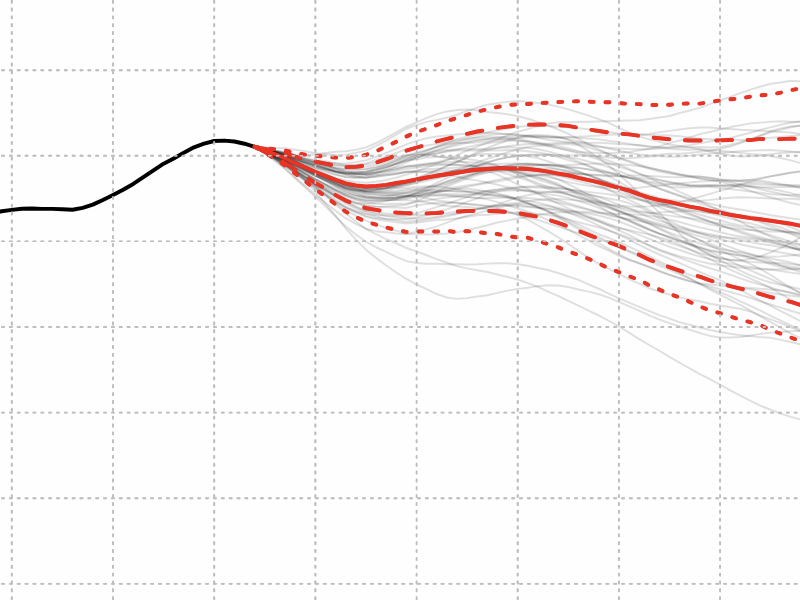

The distribution tail of f(n) is indeed fat; it decays in proportion to tn–x. I have values of the constants of proportionality. In this respect the distribution of f(n) is similar to members of the stable domain of attraction with index tn. Also, it is nothing like a lognormal law, which has finite moments of all orders.

There is a limit law too: log(f(n))/n log(n) converges in distribution to the standard exponential law. This confirms your remarks about the probability of f(n) being bigger than n!. It does indeed converge to 1/e. In fact the probability that f(n) > (n!)x converges to exp(–x).

A couple of general remarks. There is a book devoted to Products of Random Variables: by J Galambos & I Simonelli, Marcel Dekker Inc., (2004). The stopping rule defining f(n) is quite different to the model considered by Reed and Hughes; in their case it is independent of their summand random variables. For f(n) it is defined by its random factors, although it is a stopping time. Finally, f(n) is a function. For each n, it is a function defined on some sample space which could be made explicit, but almost never is. Besides being a random variable, it differs from n! in that f(n+1) cannot be computed from f(n) simply by multiplying by another random variable; changing n changes the probability law of the factor random variables. The situation is very similar to triangular arrays studied in the general summation theory of independent random variables, infinite divisibility and related stuff.

Publication history

First publication: 3 June 2007

Converted to Eleventy framework: 22 April 2025